Proof is the essence of mathematics. Any mathematical result should be derived from first principles using a watertight chain of logical reasoning. Proof is what separates mathematics from other intellectual endeavours, and it's what makes it so elegant and pure.

Kevin Buzzard. Photo: Thomas Angus, Imperial College London.

It's a high standard that is diligently applied in undergraduate maths courses — but not in the higher echelons of mathematical research,Kevin Buzzard, Professor of Pure Mathematics at Imperial College, said at a recent seminar talk in Cambridge. There are proofs with holes, proofs with mistakes, and proofs that are only understood by one or two people in the entire world. Something having been published in an academic journal doesn't always mean it's true. To know for sure which results to believe you need to be part of an in-crowd with access to experts who make the consensus. "Things are a bit out of control," Buzzard says.

Buzzard is himself one of those experts. A professional research mathematician since 1998, he worked on some of the maths used in the proof of Fermat's famous last theorem during his PhD. His unease with the standard of proof in academic maths only crept up on him over recent years, perhaps part of a mathematical mid-life crisis, he says, causing him to re-evaluate how things are done in his chosen line of work.

What's the problem?

The problem isn't usually down to people purposefully trying to deceive. It's not uncommon, for example, for authors to refer to unpublished papers in their work, if they're confident the unpublished results are correct and will soon go through the peer-review process that happens before papers are accepted by a journal. Sometimes, however, the unpublished results never do appear. And as more people build on work that relied on the unchecked results, the fact that they are unchecked can recede into the background. This is something that happened, for example, to a well-known monograph by James Arthur which relied on four unpublished papers by Arthur himself. People are aware of the potential holes in the proof, but the consensus is that they're probably ok. Arthur has, deservedly, been awarded several major prizes recently for his contributions to mathematics, but this serves to support the impression that the results are 100% sound.

Errors can also be a problem. Everyone makes mistakes and sometimes these mistakes also escape the expert referees who decide whether a paper should be published — especially because referees don't always check results line by painful line, but aim to "convince themselves that the methods used are strong enough to prove the main result," as Buzzard puts it. When clear mistakes are found after publication, no self-respecting mathematician will refuse to acknowledge them. But the correction, or even retraction, then appears in the next issue of the journal as an erratum or editor's note. And how many people read through those? People in the area of expertise the results belong to would know, of course, but outsiders wouldn't.

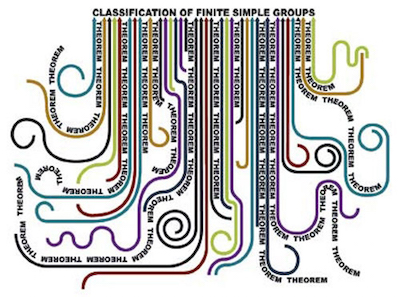

Proofs of theorem can be so long and complex that only a few people in the world understand them.

Perhaps the most spectacular source of errors or holes comes in proofs that are so long and complex that only a few people in the world understand them. A famous example is something called the classification of finite simple groups: a triumph of twentieth-century mathematics which, as the name suggests, involves classifying each of an infinite number of mathematical objects into families. The first version of the proof that the classification was correct and complete was announced in 1983 and ran to over 10,000 pages, spread across 500 journal articles, by over 100 different authors. There turned out to be a problem with this first proof, which took nine years and another paper of over 1,000 pages to fix.

Given this enormous complexity, the main authors promised to come up with a "simpler" second generation version, to be published in twelve volumes (find out more here). As of 2019, only seven of these volumes have appeared. The complete proof was probably only ever understood by very few people — none of whom are getting any younger. Mathematicians agree that the classification of finite simple groups is indeed complete and that someone trailing through the huge amount of available literature should be able to piece it together. But how many people have the time (and the brains) to do that?

Buzzard believes that these kinds of problems undermine pure maths to such an extent that it's slid into a crisis. But what's to be done? Mathematics is a creative subject, not a procedural one, and mathematicians are humans. They like to work in groups and don't much enjoy being bogged down by details. Asking them to always stick to due process is asking them to work like machines.

The solution?

But this, Buzzard suggests, is where the solution lies: not asking mathematicians to work like machines, but asking them to use machines. Computer scientists and mathematicians form two related tribes, but with essential differences: "Computer scientists fix bugs, while we ignore them," as Buzzard puts it. Computer scientists, some of whom frequently wander among mathematicians, have developed theorem proving software such as LEAN and Isabelle. Such software won't magically find proofs of difficult results that have eluded people for centuries — human mathematicians are still very much needed — but it can help you check your proofs are sound. When given a proof phrased in a code it can understand the software will check that all logical steps are valid and/or based on previously established results and accepted axioms of mathematics. If there's an error, the machine will let you know. And if you've left a hole in your proof to come back to later, the machine won't let you forget.

As of yet, computers are unable to do proper mathematics. But Buzzard thinks that they can still be of great help in checking theorems.

A computer scientist, says Buzzard, could argue that a result isn't proven until it has been formally checked by theorem proving software. This means that many monumental results in maths, including Fermat's last theorem or the classification of finite simple groups, to a computer scientist's mind could still be checked more carefully.

Buzzard is aware that turning their proofs into the code the software can understand would involve a phenomenal effort — for Fermat's last theorem, Buzzard estimates it would cost around a 100 million pounds — but he is suggesting that at least we could teach budding mathematicians to embrace the approach. His undergraduates at Imperial College are learning how to use theorem proving software and encouraged to apply it to their results. If mathematicians get into the habit of doing this, while others work on formally checking existing results, then maths could be brought back onto the right track.

One fear that surrounds the use of computers in mathematics is that true understanding might be lost. If computers do our job for us, and if they do it in a way our inferior data processing ability doesn't allow us to follow, then we can't claim to understand the results that pop out. But Buzzard isn't advocating black box algorithms as they are used, for example, in machine learning. Rather than making proofs inaccessible to humans, he would like to make them accessible to computer programmes which can check them with great reliability. Those programmes aren't infallible of course, but they have fewer bugs than humans do.

Nothing will be lost, Buzzard thinks, and mathematics only stands to gain.

By

댓글 없음:

댓글 쓰기